The Origins of Intelligence Testing

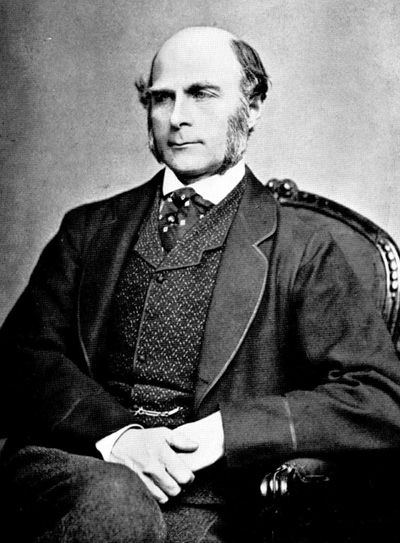

Theories about intelligence and methods for measuring it have been proposed for centuries. In the 19th century, Sir Francis Galton, a half-cousin of Charles Darwin, carried out some of the first systematic research on individual differences in human intelligence. He believed that intelligence was inherited and that it could be measured through sensory perception, memory, and other mental abilities. The term intelligence quotient (IQ) came later: it is credited to the German psychologist William Stern, who introduced it in 1912.

In the early 20th century, French psychologist Alfred Binet was asked by the French government to develop a test to identify children who needed extra help in school. Binet and his colleague Théodore Simon developed the first modern intelligence test, the Binet-Simon Scale, published in 1905. Lewis Terman, a psychologist at Stanford University, revised it in 1916 to create the Stanford-Binet test, later editions of which are still in use today.

Intelligence testing has faced criticism and controversy over the years, with some arguing that it is culturally biased and does not accurately measure all aspects of intelligence. Despite these criticisms, intelligence tests continue to be widely used in education, employment, and other settings to assess cognitive abilities and to identify individuals who may need additional support or resources.

The Development of the Stanford-Binet Test

The Stanford-Binet test measures various cognitive abilities, including logical thinking, problem-solving, and spatial awareness. It consists of a series of tasks and questions that are designed to assess an individual’s intelligence level. The test is often administered to children, but it can also be used to evaluate the intelligence of adults.

The Stanford-Binet test has undergone several revisions since it was first developed. The most recent version, published in 2003, is known as the Stanford-Binet Fifth Edition (SB5). The SB5 includes several additional subtests and an updated scoring system that takes into account the age of the test-taker.
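To illustrate what it means for a scoring system to take age into account, here is a minimal sketch of age-normed standard scoring. It assumes the common convention of reporting scores on a scale with a mean of 100 and a standard deviation of 15. The norm table, the raw scores, and the function names are hypothetical and invented for illustration; they are not taken from the SB5 or any published test.

```python
# Hypothetical norms: age -> (mean raw score, standard deviation) for that age group.
# These numbers are made up for illustration only.
HYPOTHETICAL_NORMS = {
    8: (40.0, 8.0),
    12: (56.0, 8.0),
}


def standard_score(raw_score, norm_mean, norm_sd, scale_mean=100.0, scale_sd=15.0):
    """Convert a raw score to a standard score relative to a norm group."""
    if norm_sd <= 0:
        raise ValueError("norm_sd must be positive")
    # How many standard deviations the raw score lies from the group mean.
    z = (raw_score - norm_mean) / norm_sd
    return scale_mean + scale_sd * z


def score_for_age(raw_score, age):
    """Look up the (hypothetical) norms for an age group and scale the raw score."""
    norm_mean, norm_sd = HYPOTHETICAL_NORMS[age]
    return standard_score(raw_score, norm_mean, norm_sd)


if __name__ == "__main__":
    # The same raw score is interpreted differently depending on the age group.
    for age in (8, 12):
        print(age, score_for_age(48, age))
```

Under these made-up norms, a raw score of 48 sits above the average for the 8-year-old group and below the average for the 12-year-old group, so the same performance yields different standard scores. Real tests derive their norms from large standardization samples rather than a small lookup table.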

Despite its widespread use, the Stanford-Binet test has faced criticism over the years. Some critics argue that it is culturally biased, as it tends to favor individuals who are familiar with Western culture and values. Others argue that it is not an accurate measure of intelligence, as it only assesses certain cognitive abilities and does not take into account other factors that may influence intelligence, such as creativity, emotional intelligence, and motivation.

Despite these criticisms, the Stanford-Binet test remains widely used. It is often administered in schools, workplaces, and other settings to assess an individual’s cognitive abilities and potential for learning and development.

Intelligence Testing in Modern Times

One of the key features of modern intelligence testing is that it is designed to be more objective and standardized than earlier tests. The tests are meant to be administered and scored in the same way for all individuals, regardless of their background. This makes scores more comparable across test-takers and is intended to reduce bias arising from how a test is given, although it cannot remove bias entirely.

One of the main criticisms of intelligence testing in modern times is that it can be culturally biased. The content of a test may assume knowledge or experience that is more common in certain cultural backgrounds, which can disadvantage test-takers from other backgrounds. Despite this criticism, intelligence tests are still widely used in a variety of settings, including education, employment, and military selection.

There is ongoing debate about the usefulness and validity of intelligence testing in modern times. Some argue that intelligence tests are a useful tool for assessing an individual’s cognitive abilities and potential, while others argue that they are overly simplistic and do not accurately reflect an individual’s true intelligence. Ultimately, the use and interpretation of intelligence tests will depend on the specific context in which they are being used.

The Future of Intelligence Testing

One possibility is the increasing use of artificial intelligence (AI) in intelligence testing. AI algorithms can analyze large amounts of data quickly, and could potentially be used to identify patterns and trends in test results that humans might not be able to detect. However, there are also concerns about the potential for bias in AI systems, and the need to ensure that they are transparent and fair in their assessments.

Another trend in intelligence testing is the shift towards more holistic and comprehensive approaches that take into account not just cognitive abilities, but also non-cognitive factors such as social and emotional intelligence. These more comprehensive measures may be more predictive of real-world success and may provide a more accurate picture of an individual’s overall potential and abilities.

Regardless of the direction intelligence testing takes in the future, it is important to continue to question and critically evaluate the assumptions and methods underlying these tests. Intelligence is a complex and multifaceted concept, and no single test can capture all of its dimensions. By staying attuned to the latest research and advances in the field, we can continue to improve and refine the ways in which we measure and understand human intelligence.
